You’re staring at a WordPress site with 500+ pages, and your client wants a complete audit of broken links.

You could click through every single page manually, or you could let a web crawler do the heavy lifting in about 20 minutes.

Web crawlers are automated programs that systematically browse websites, following every link they find and cataloging what they discover.

In this post, I’ll explain what web crawlers do, recommend the best tools for different situations, and show you how to use one for your next website migration.

You’ll learn:

- What web crawlers are and how they work

- Why crawlers are essential for technical SEO audits and website migrations

- The top web crawler tools for WordPress, with in-depth reviews

- How to use crawlers for pre- and post-migration site verification


What Is a Web Crawler?

A web crawler (also called a spider or bot) is a program that systematically browses the web to index and catalog pages.

The most famous example? Googlebot.

Googlebot starts with a list of known URLs from previous crawls and sitemaps. It visits each page, reads the content, and follows every link it finds. Those new links get added to its queue for future visits.

This process repeats endlessly, building Google’s massive index of web pages.
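To make that loop concrete, here is a minimal Python sketch of the queue-and-follow idea. It is not how Googlebot is actually built. The `crawl` function, the `LinkParser` class, and the three-page `FAKE_SITE` are all assumptions made up for illustration, and the site is held in memory so nothing is fetched over the network.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkParser(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(seed_urls, fetch):
    """Breadth-first crawl. `fetch(url)` returns HTML, or None if the page is missing."""
    queue = deque(seed_urls)
    seen = set(seed_urls)
    visited = []
    while queue:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue  # nothing to read, so no new links to follow
        visited.append(url)
        parser = LinkParser()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links and drop #fragments so each page is queued once.
            link, _fragment = urldefrag(urljoin(url, href))
            if link not in seen:
                seen.add(link)
                queue.append(link)
    return visited


# Hypothetical three-page site held in memory instead of fetched over HTTP.
FAKE_SITE = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="/about#team">Team</a> <a href="/missing">Old post</a>',
}

pages = crawl(["https://example.com/"], FAKE_SITE.get)
print(pages)
```

The `seen` set is what keeps the crawler from visiting the same page twice when several pages link to it.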

You can run a smaller-scale version on your own website. Instead of indexing the entire internet, your crawler maps out your site’s complete structure and content with machine-level accuracy.

No human error. Just a comprehensive view of every page the crawler can reach on your domain.

Why Use a Web Crawler?

The primary reason to use a web crawler is to run technical SEO audits.

Crawlers excel at identifying broken links, faulty redirects that send users in circles, missing or duplicate page titles, empty meta descriptions, and thin content pages that may be harming your rankings.
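As a rough illustration of how a tool might spot a redirect that sends users in circles, here is a small Python sketch. The `trace_redirects` function and the `REDIRECTS` map are hypothetical. A real crawler would build that map from the `Location` headers it receives rather than from a hard-coded dictionary.

```python
def trace_redirects(start, redirects, max_hops=10):
    """Follow a {source: target} redirect map and report how the chain ends."""
    chain = [start]
    while chain[-1] in redirects:
        if len(chain) > max_hops:
            return chain, "too many hops"
        target = redirects[chain[-1]]
        chain.append(target)
        if target in chain[:-1]:
            # We have been here before, so the chain can never finish.
            return chain, "loop"
    return chain, "ok"


# Hypothetical redirect rules: one healthy redirect and one loop.
REDIRECTS = {
    "/old-page": "/new-page",
    "/spring-sale": "/summer-sale",
    "/summer-sale": "/spring-sale",
}

healthy = trace_redirects("/old-page", REDIRECTS)
broken = trace_redirects("/spring-sale", REDIRECTS)
print(healthy)
print(broken)
```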

Content auditing is another major use case.

Crawlers frequently uncover forgotten pages that can harm your SEO, like auto-generated tag archives, old landing pages, or duplicate content that has accumulated over time. These pages often fly under the radar during manual audits but show up immediately in a comprehensive crawl.

But here’s where crawlers become absolutely critical: website migrations.

When you’re moving a WordPress site to a new domain or server, a crawler creates a complete map of your old site. You can then compare this against your new site to verify that every page, every redirect, and every important file made the journey successfully.

Without this verification step, you’re basically crossing your fingers and hoping nothing got lost in translation.

Our Web Crawler Recommendations for WordPress

The right crawler depends on your technical comfort level and what you’re trying to accomplish.

Some are built for SEO professionals. Others cater to business owners who just want to point, click, and get results.

Here’s my breakdown of the best options:

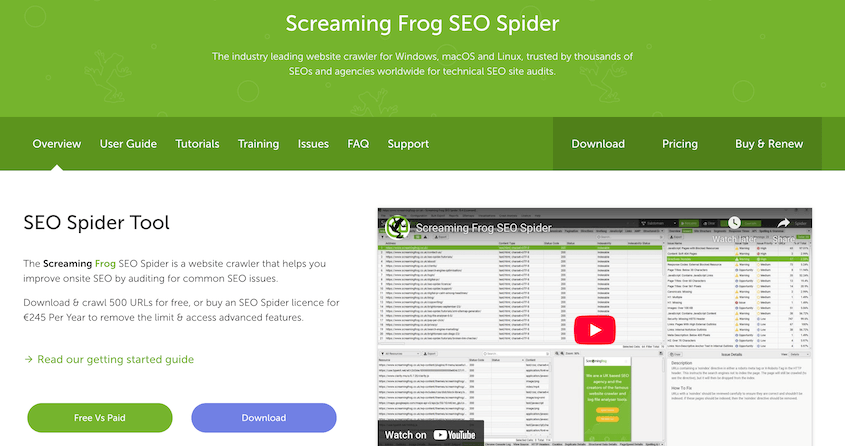

- Screaming Frog SEO Spider: Desktop app that’s the gold standard for technical SEO pros, free up to 500 URLs

- Webscraper.io: Chrome extension for quick data extraction tasks without software installation

- Semrush Site Audit: Comprehensive crawler within the full Semrush SEO platform

- Ahrefs Site Audit: Fast crawler with excellent visualization, free up to 5,000 pages monthly

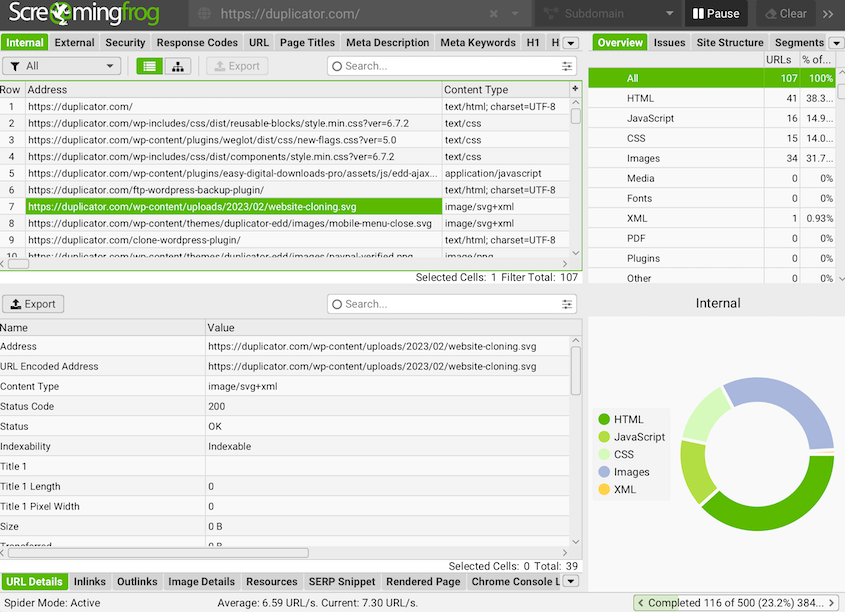

Screaming Frog crawls your site to find broken links, audit redirects, analyze page titles and meta descriptions, and extract specific data using CSS Path or XPath selectors. It can handle JavaScript rendering, follow external links, and export everything to CSV for further analysis.

This desktop application (available for Windows, macOS, and Ubuntu) has been the gold standard for technical SEO professionals for years.

The free version crawls up to 500 URLs, which covers most small to medium WordPress sites. For larger sites, the paid license removes that limit and adds features like custom extraction, Google Analytics integration, and scheduled crawls.

The interface can feel overwhelming at first. However, I find that the depth of data it provides is unmatched.

Webscraper.io is a Chrome extension that focuses on quick data extraction tasks.

The convenience factor is huge here—no software to install, no complex setup. You create a “sitemap” (their term for a scraping plan) directly in your browser, telling it which elements to click and what data to extract.

Webscraper.io is perfect for smaller jobs like grabbing a list of blog post titles from a competitor’s site or collecting product information from a few pages. The visual selector makes it easy to target exactly what you need.

The free version handles basic scraping tasks. Paid plans add cloud-based crawling, scheduled runs, and API access for integrating the data into other tools.

Semrush is one of the most popular SEO platforms. It provides comprehensive toolkits that help marketers and businesses improve their search visibility.

For on-page and technical SEO, Semrush provides a Site Audit tool, which is powered by a crawler.

Semrush will crawl your website, looking for health issues like duplicate content, missing title tags, broken images, and other errors. Once you know about these issues, you can fix them.

If you’re already paying for Semrush, the Site Audit crawler is included. However, Semrush plans start at $117/month at the time of writing, so it’s probably not worth subscribing just for the crawler unless you plan to use the other SEO tools too.

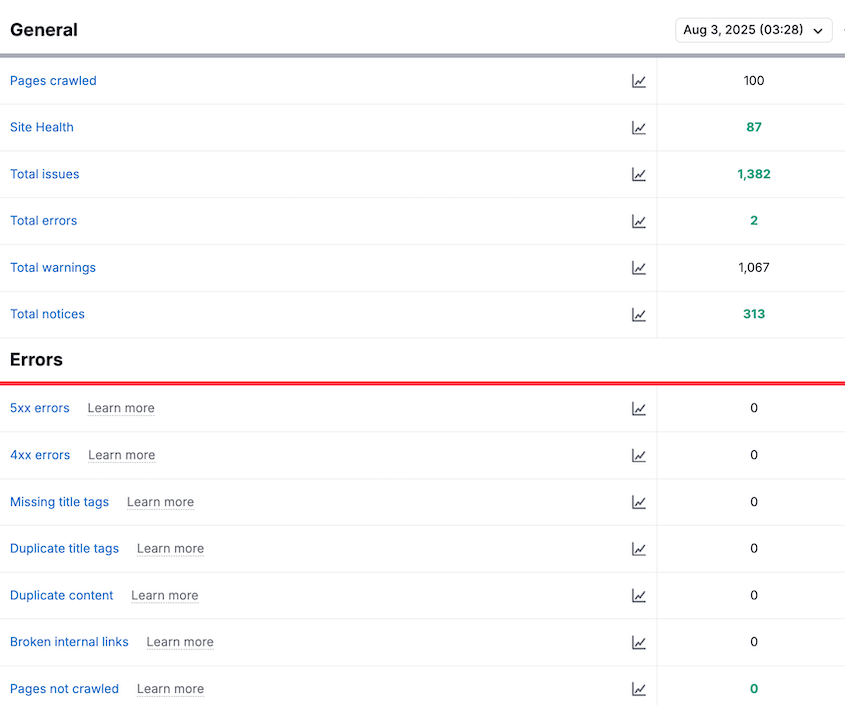

Like Semrush, Ahrefs runs a comprehensive Site Audit within its SEO toolkit.

The crawler is fast, and the interface does an excellent job of visualizing technical errors. It gives you an overall Health Score and a list of potential problems.

Plus, it integrates with other Ahrefs tools like the Rank Tracker and Site Explorer. You can see how technical issues correlate with ranking performance and backlink profiles.

You can use Ahrefs’s Site Audit for free up to 5,000 pages crawled monthly.

How to Use a Web Crawler for a WordPress Migration

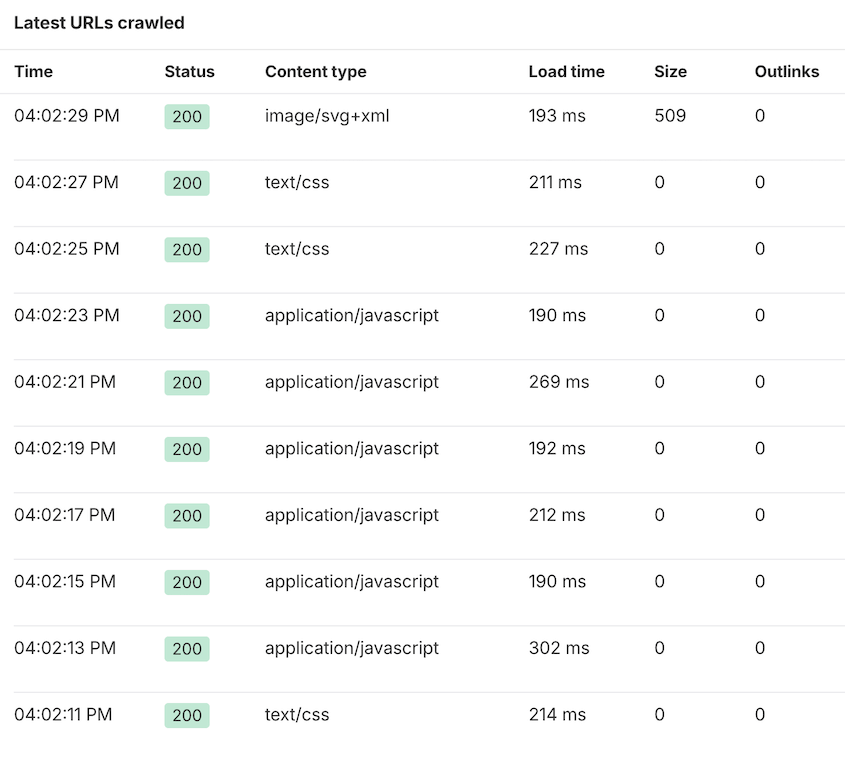

Here’s where crawlers prove their worth. A proper migration involves two crawls: one before you move the site and one after.

The Pre-Migration Benchmark Crawl

Before you migrate, create a complete inventory of your current site by crawling it.

Fire up your chosen crawler and run it on your source site. Configure it to capture the URL, HTTP status code, page title, meta description, H1 tags, and word count for every page it finds.

Export all this data to a spreadsheet. This becomes your definitive record of what your site looked like before the migration.
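If you are curious what that inventory looks like under the hood, here is a minimal Python sketch that pulls the same fields out of one page and writes them as a CSV row. The `PageAudit` class, the `audit_page` helper, and `SAMPLE_HTML` are assumptions for illustration, and the word count is a naive split on whitespace, so a dedicated crawler will likely report somewhat different numbers.

```python
import csv
import io
from html.parser import HTMLParser


class PageAudit(HTMLParser):
    """Pulls the title, meta description, H1 text, and visible word count from one page."""

    SKIP = {"script", "style"}  # text inside these tags is not visible content

    def __init__(self):
        super().__init__()
        self.title = ""
        self.meta_description = ""
        self.h1 = []
        self.word_count = 0
        self._stack = []  # open tags we care about: title, h1, script, style

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.meta_description = attrs.get("content") or ""
        elif tag in ("title", "h1") or tag in self.SKIP:
            self._stack.append(tag)
            if tag == "h1":
                self.h1.append("")

    def handle_endtag(self, tag):
        if self._stack and self._stack[-1] == tag:
            self._stack.pop()

    def handle_data(self, data):
        current = self._stack[-1] if self._stack else None
        if current == "title":
            self.title += data
        elif current in self.SKIP:
            return
        else:
            if current == "h1":
                self.h1[-1] += data
            self.word_count += len(data.split())


def audit_page(url, status, html):
    """Build one spreadsheet row for a crawled page."""
    parser = PageAudit()
    parser.feed(html)
    return {
        "url": url,
        "status": status,
        "title": parser.title.strip(),
        "meta_description": parser.meta_description,
        "h1": " | ".join(text.strip() for text in parser.h1),
        "word_count": parser.word_count,
    }


# Hypothetical page; a real crawler would supply the HTML and status code.
SAMPLE_HTML = """<html><head><title>Pricing</title>
<meta name="description" content="Plans and pricing.">
<style>body { color: black; }</style></head>
<body><h1>Simple pricing</h1><p>Pick the plan that fits.</p></body></html>"""

row = audit_page("https://example.com/pricing", 200, SAMPLE_HTML)

# Write the row to an in-memory CSV; swap io.StringIO for open(...) to save a file.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=list(row))
writer.writeheader()
writer.writerow(row)
csv_text = buffer.getvalue()
print(csv_text)
```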

The Post-Migration Validation Crawl

After you’ve moved your site to its new home, run the same crawl configuration on your destination site.

Now comes the detective work: compare the two spreadsheets. Use Excel’s VLOOKUP function (or similar tools in Google Sheets) to cross-reference the data.

Look for pages that returned 200 status codes on the old site but are throwing 404s on the new one. Check that your redirects are working properly—a 301 redirect on the old site should still be a 301 redirect on the new site. Verify that page titles and meta descriptions made the journey intact.
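If spreadsheets aren’t your thing, the same comparison can be sketched in a few lines of Python. This is only an illustration: the `load` and `compare` helpers and both sample exports are hypothetical, and the column names (`url`, `status`, `title`) are assumptions that will differ from what your crawler actually exports. It also assumes URL paths stay the same after the move, so the rows can be matched by path.

```python
import csv
import io

# Hypothetical exports; real ones would come from your crawler's CSV export.
OLD_CRAWL = """url,status,title
/,200,Home
/about,200,About Us
/pricing,200,Pricing
/old-offer,301,
"""

NEW_CRAWL = """url,status,title
/,200,Home
/about,200,About
/pricing,404,Page not found
/old-offer,301,
"""


def load(csv_text):
    """Index crawl rows by URL path so the two crawls can be joined."""
    return {row["url"]: row for row in csv.DictReader(io.StringIO(csv_text))}


def compare(old, new):
    """Return (url, problem) pairs for every old page that changed or vanished."""
    issues = []
    for url, before in old.items():
        after = new.get(url)
        if after is None:
            issues.append((url, "missing from new crawl"))
        elif after["status"] != before["status"]:
            issues.append((url, f"status {before['status']} -> {after['status']}"))
        elif after["title"] != before["title"]:
            issues.append((url, "title changed"))
    return issues


issues = compare(load(OLD_CRAWL), load(NEW_CRAWL))
for url, problem in issues:
    print(url, problem)
```

Looping over the old crawl rather than the new one is deliberate: the old site is the benchmark, so every row in it must be accounted for.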

This comparison process catches migration issues that might otherwise go unnoticed for months.

Frequently Asked Questions (FAQs)

Is web crawling legal?

Generally, yes—crawling publicly accessible web pages is legal if you respect robots.txt and crawl at a reasonable pace. However, some sites prohibit crawling in their terms of service. When in doubt, crawl your own sites or get explicit permission.

What’s the difference between a web crawler, scraper, and spider?

A crawler (or spider) discovers and visits web pages by following links, while a scraper extracts specific data from those pages. Most modern tools perform both functions. Understanding the distinction helps when evaluating different tools for specific tasks.

What are the types of web crawlers?

Web crawlers fall into four main categories: technical SEO crawlers (like Screaming Frog), data extraction tools (like Octoparse), integrated suite crawlers (built into SEO platforms), and developer frameworks (like Scrapy). Each serves different needs and skill levels.

What’s the best free web crawler?

For technical SEO work, Screaming Frog’s free tier handles up to 500 URLs. For quick data extraction, the Webscraper.io browser extension works well without software installation.

Will a crawler slow down or harm my website?

An aggressive crawler can slow down your site much as a traffic spike would, especially on shared hosting. Good crawling tools let you control crawl speed with delays between requests and connection limits. Always use these settings on production sites.
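The delay setting boils down to something like the Python sketch below: one request at a time, with a pause in between. The `polite_fetch_all` function and its parameters are hypothetical and not taken from any specific tool. The example passes a stand-in for `time.sleep` so it runs instantly and records the pauses instead of waiting.

```python
import time


def polite_fetch_all(urls, fetch, delay_seconds=1.0, sleep=time.sleep):
    """Fetch URLs one at a time, pausing between requests."""
    results = {}
    for index, url in enumerate(urls):
        if index > 0:
            sleep(delay_seconds)  # wait before every request except the first
        results[url] = fetch(url)
    return results


# Demonstration with a fake fetch and a fake sleep that only records the pauses.
pauses = []
results = polite_fetch_all(
    ["/", "/about", "/blog"],
    fetch=lambda url: 200,
    delay_seconds=2.0,
    sleep=pauses.append,
)
print(results, pauses)
```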

How do I control which web crawlers can access my site?

The robots.txt file tells crawlers which parts of your site they can access. Place it at the root of your domain (yoursite.com/robots.txt) and use it to block specific crawlers or restrict access to directories. Keep in mind that well-behaved crawlers respect it, but malicious bots can ignore it.
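Here is a small sketch of how a well-behaved crawler reads those rules, using Python’s built-in `urllib.robotparser`. The robots.txt content, the `BadBot` and `MyCrawler` user agents, and the example.com URLs are all made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block one crawler entirely, keep everyone out of /wp-admin/.
ROBOTS_TXT = """\
User-agent: BadBot
Disallow: /

User-agent: *
Disallow: /wp-admin/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

checks = {
    "BadBot on /blog/": parser.can_fetch("BadBot", "https://example.com/blog/"),
    "MyCrawler on /blog/": parser.can_fetch("MyCrawler", "https://example.com/blog/"),
    "MyCrawler on /wp-admin/": parser.can_fetch(
        "MyCrawler", "https://example.com/wp-admin/options.php"
    ),
}
for label, allowed in checks.items():
    print(label, "->", "allowed" if allowed else "blocked")
```

A polite crawler runs a check like this before every request. A malicious bot simply skips it, which is why robots.txt is a request and not a security control.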

Can AutoGPT do web scraping?

AutoGPT can write code for web scrapers using libraries like Scrapy, but it doesn’t perform the crawling itself. Think of it as a coding assistant that helps build scraping tools. You still need to run the generated code to actually crawl websites.

Your Action Plan: Choosing the Right Web Crawler

Here’s how to pick the right crawler for your situation:

- Choose Screaming Frog if you’re doing hands-on SEO work or running an agency.

- Go with Webscraper.io if you need to extract specific data but don’t want to mess with complex interfaces.

- Select Ahrefs or Semrush Site Audit if you’re already using their SEO platforms.

You can run the most thorough crawler audit in the world, but if your migration tool drops files, breaks databases, or corrupts your site structure, all that preparation becomes pointless.

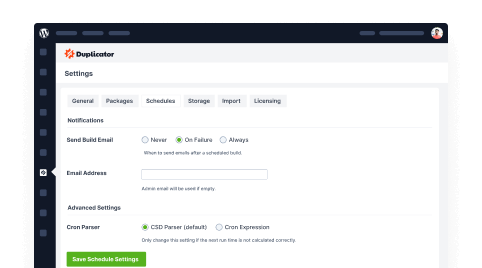

That’s why I recommend pairing your crawler with Duplicator Pro. While your crawler handles the before-and-after verification, Duplicator Pro handles the actual heavy lifting of moving your WordPress site.

Ready to upgrade your migration workflow? Try Duplicator Pro today and see why thousands of WordPress professionals trust it with their site moves.


Joella is a writer with years of experience in WordPress. At Duplicator, she specializes in site maintenance — from basic backups to large-scale migrations. Her ultimate goal is to make sure your WordPress website is safe and ready for growth.